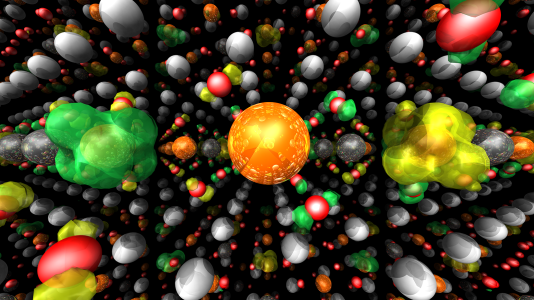

Researchers are using the QMCPACK code to accurately predict complex materials properties, such as the electron spin density of cobalt-doped silver nickel oxide delafossite, depicted in this image. (Image by ALCF Visualization and Data Analytics Team.)

Researchers will use the exascale system and AI to speed up the search for new materials for batteries, catalysts, and other applications.

By Jeremy Sykes

LEMONT, Ill.—The U.S. Department of Energy’s (DOE) Argonne National Laboratory is building one of the nation’s first exascale systems, Aurora. To prepare codes for the architecture and scale of the new supercomputer, 15 research teams are taking part in the Aurora Early Science Program (ESP) through the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science user facility.

With access to pre-production time on the system, these researchers will be among the first in the world to use Aurora for science. Through the Aurora Early Science Program, researchers are preparing to leverage Argonne’s exascale supercomputer to model the behavior of materials at an unprecedented level of detail.

Rapidly evolving supercomputing and artificial intelligence (AI) technologies are giving researchers powerful tools to speed up the search for new materials that can improve the design of batteries, medicines, and many other important applications.

With the potential to simulate and predict the behavior of countless materials and molecules in a fraction of the time required by traditional methods, next-generation computing hardware and software promise to revolutionize efforts to develop new materials with targeted properties.

“The power of exascale supercomputers, combined with advances in artificial intelligence, will provide a huge boost to the process of materials design and discovery,” said Anouar Benali, a computational scientist at Argonne National Laboratory.

Benali is leading a project to prepare a powerful materials science and chemistry code called QMCPACK for Argonne’s upcoming Aurora exascale system. Developed in collaboration with Intel and Hewlett Packard Enterprise, Aurora is expected to be one of the world’s fastest supercomputers when it is deployed for science. QMCPACK is an open-source code that uses the Quantum Monte Carlo (QMC) method to predict how electrons interact with one another for a wide variety of materials.

“With each new generation of supercomputer, we are able to improve QMCPACK’s speed and accuracy in predicting the properties of larger and more complex materials,” Benali said. “Exascale systems will allow us to model the behavior of materials at a level of accuracy that could even go beyond what experimentalists can measure.”

Benali’s ESP project complements a broader effort supported by DOE’s Exascale Computing Project (ECP). Led by Paul Kent of DOE’s Oak Ridge National Laboratory, the ECP QMCPACK project is dedicated to preparing the code for the nation’s larger exascale ecosystem, including Oak Ridge’s Frontier system. The QMCPACK development team includes researchers from Argonne, Oak Ridge, Lawrence Livermore, and Sandia National Laboratories, as well as North Carolina State University.

“One of the goals of this project that we’re really excited about is providing the capability for really accurate and reliable predictions of the fundamental properties of materials,” Kent said. “This is a capability that the field has been lacking. The sorts of properties we’re interested in are things like, what is the structure of the material? How easy are they to form? How do they conduct electricity or heat?”

“For example, we could look at a battery electrode or a catalyst and learn something about how they’re working and also get some insight into how they might be improved,” Kent added. “These methods can really help us improve a lot of technologies.”

When investigating new materials, understanding how a material’s atoms and their electrons behave is extremely important. Their interactions give rise to a material’s structure and properties. However, simulating materials at this level becomes highly challenging for complex systems with a large number of electrons.

That’s where QMCPACK comes in. The code, which is publicly available, is particularly good at predicting the properties of materials by solving the Schrödinger equation.

“The Schrödinger equation is basically the ultimate equation that tells us how electrons behave in the world,” Benali said.

Compared to other simulation methods that rely on more approximations, the QMC method solves the Schrödinger equation with a much higher level of accuracy. But achieving that precision, while also simulating large numbers of electrons, demands a lot of computing power.
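To give a rough sense of how a Monte Carlo approach tackles the Schrödinger equation, here is a toy variational Monte Carlo sketch for a single hydrogen atom. It is not QMCPACK code; the trial wavefunction ψ(r) = e^(−αr), the step size, and the function names are illustrative assumptions. A random walker samples electron positions with probability |ψ|², and averaging the so-called local energy over those samples estimates the energy in hartree atomic units.

```python
import math
import random


def local_energy(r, alpha):
    # Local energy H*psi/psi for the assumed trial function psi = exp(-alpha*r)
    # in hartree atomic units: E_L = -alpha^2/2 + (alpha - 1)/r.
    return -0.5 * alpha**2 + (alpha - 1.0) / r


def vmc_hydrogen(alpha, n_steps=50_000, step=0.5, seed=1):
    """Toy variational Monte Carlo estimate of the hydrogen ground-state energy."""
    rng = random.Random(seed)
    pos = [0.5, 0.5, 0.5]                    # arbitrary starting electron position
    r = math.sqrt(sum(x * x for x in pos))
    energies = []
    for i in range(n_steps):
        # Propose a random move inside a cube of half-width `step`.
        trial = [x + rng.uniform(-step, step) for x in pos]
        r_trial = math.sqrt(sum(x * x for x in trial))
        # Metropolis acceptance with ratio |psi(trial)|^2 / |psi(pos)|^2.
        if rng.random() < math.exp(-2.0 * alpha * (r_trial - r)):
            pos, r = trial, r_trial
        if i >= n_steps // 10:               # discard the first 10% as equilibration
            energies.append(local_energy(r, alpha))
    mean = sum(energies) / len(energies)
    var = sum((e - mean) ** 2 for e in energies) / len(energies)
    return mean, var


if __name__ == "__main__":
    for a in (0.8, 1.0, 1.2):
        e, v = vmc_hydrogen(a)
        print(f"alpha={a}: E ~ {e:.4f} Ha, variance {v:.4f}")
```

With α = 1 the trial function happens to be the exact ground state, so every sample returns −0.5 hartree and the variance is zero; other values of α give energies above −0.5 (about −0.48 for α = 0.8), as the variational principle requires. Real materials contain many interacting electrons, which is what makes production QMC runs so computationally expensive.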

“Some of the materials and properties we are targeting are incredibly computationally costly to simulate accurately—that’s the trade-off compared to other methods—but of course, this is where exascale has its role,” Kent said.

Ultimately, their computations will help guide and speed up experiments aimed at discovering new materials. The QMCPACK team works closely with experimental groups to help pinpoint strong candidates for various applications for testing in a laboratory.

“We want our experimental colleagues to be able to focus on a shortlist of the most promising materials,” Benali said. “So having reliable simulations is becoming an increasingly important part of the materials design and discovery process.”

One of Benali’s initial research goals for Aurora was to use QMCPACK to find high-performing materials for microchip transistors. But as the software grows and improves and Aurora’s potential becomes clearer, the team’s goals have expanded to include searches for materials and molecules for batteries, hydrogen storage, drug design, and other uses.

“The amount of applications we can look at is almost endless,” Benali said. “And this is all thanks to these extremely large computers that will allow us [to] investigate functional materials at an unprecedented level of accuracy.”

In preparation for Aurora, the team has been using existing supercomputers and early exascale hardware, including the ALCF’s new Sunspot testbed. Sunspot is equipped with the same Intel CPUs (central processing units) and GPUs (graphics processing units) that are found in Aurora. The team has done extensive work to improve the code’s performance and functionality on exascale hardware.

“We redesigned the application under the ECP project to support computations on both CPUs and GPUs seamlessly,” said Ye Luo, an Argonne computational scientist and one of the lead developers of QMCPACK. “We designed the algorithm and code architecture to be portable across different systems so it will run efficiently on any supercomputer.”

Their work to restructure the code is expected to pay dividends beyond the first generation of exascale systems. Benali said that, thanks to work done under the ECP and ESP projects, porting QMCPACK to future supercomputers will require less effort.

With the exascale era now upon us, Benali looks forward to using the improved code on the world’s most powerful computers. Leveraging AI to supercharge materials discovery is one emerging capability that the team is particularly excited about.

“With the boost we’re getting from exascale machines and our software, we’re now at a point where we can work together with AI and machine learning specialists to reverse engineer material design, instead of trying everything at the simulation level,” said Benali. “If we know which properties we need for a particular application, we can use AI to scan for promising materials and tell us which ones to investigate further. This approach has the potential to revolutionize computer-aided materials discovery.”

The Argonne Leadership Computing Facility provides supercomputing capabilities to the scientific and engineering community to advance fundamental discovery and understanding in a broad range of disciplines. Supported by the U.S. Department of Energy’s (DOE’s) Office of Science, Advanced Scientific Computing Research (ASCR) program, the ALCF is one of two DOE Leadership Computing Facilities in the nation dedicated to open science.

Argonne National Laboratory seeks solutions to pressing national problems in science and technology. The nation’s first national laboratory, Argonne conducts leading-edge basic and applied scientific research in virtually every scientific discipline. Argonne researchers work closely with researchers from hundreds of companies, universities, and federal, state, and municipal agencies to help them solve their specific problems, advance America’s scientific leadership and prepare the nation for a better future. With employees from more than 60 nations, Argonne is managed by UChicago Argonne, LLC for the U.S. Department of Energy’s Office of Science.

The U.S. Department of Energy’s Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.

Jeremy Sykes is a freelance writer who writes about high-performance computing, computer science, mathematics, and engineering.

Source: https://www.anl.gov/article/argonnes-aurora-supercomputer-set-to-supercharge-materials-discovery